Look into a major Antarctic Ice Stream using Mixed Reality

About

AntARctica is a Mixed Reality (XR) application developed for the Microsoft HoloLens2 and Meta Quest2 (formerly Oculus Quest2). The application currently combines the following datasets: ice-penetrating radar imagery, CSV surface measurements, and topographical surface and bedrock digital elevation models (DEMs) of the Ross Ice Shelf in Antarctica. The radar data were collected by the ROSETTA-Ice Project, which acquired ~55,000 survey line kilometers of radar and other geophysical data.

Development of the antARctica application was inspired by the desire to improve on the Inside the Ice Shelf: Zoom Antarctica HoloLens application from 2019. The POL-AR/VR development team was confident it could solve many of the limitations experienced during development and deployment of the Zoom Antarctica application. This led to developing antARctica for the HoloLens2 and Quest2 headsets, whose base hardware already offers a marked improvement. The superior hardware, successful data integration, and deployment of new in-app measurement tools make antARctica an ideal educational outreach platform and earth science analysis support tool.

Development

This application was developed by: Sofía Sánchez-Zárate, Ben Yang, Shengyue Guo, Carmine Elvezio, Qazi Ashikin, and Joel Salzman; with support from Alexandra Boghosian, Steven Feiner, Isabel Cordero, Bettina Schlager, and Kirsty Tinto. The application successfully represents the following integrated datasets in geospatial context: ROSETTA-Ice Project ice-penetrating radar images, Ross Ice Shelf surface and bedrock DEMs, MEaSUREs velocity DEM, and CSVs of surface and ice thickness measurements derived from ROSETTA radargrams.

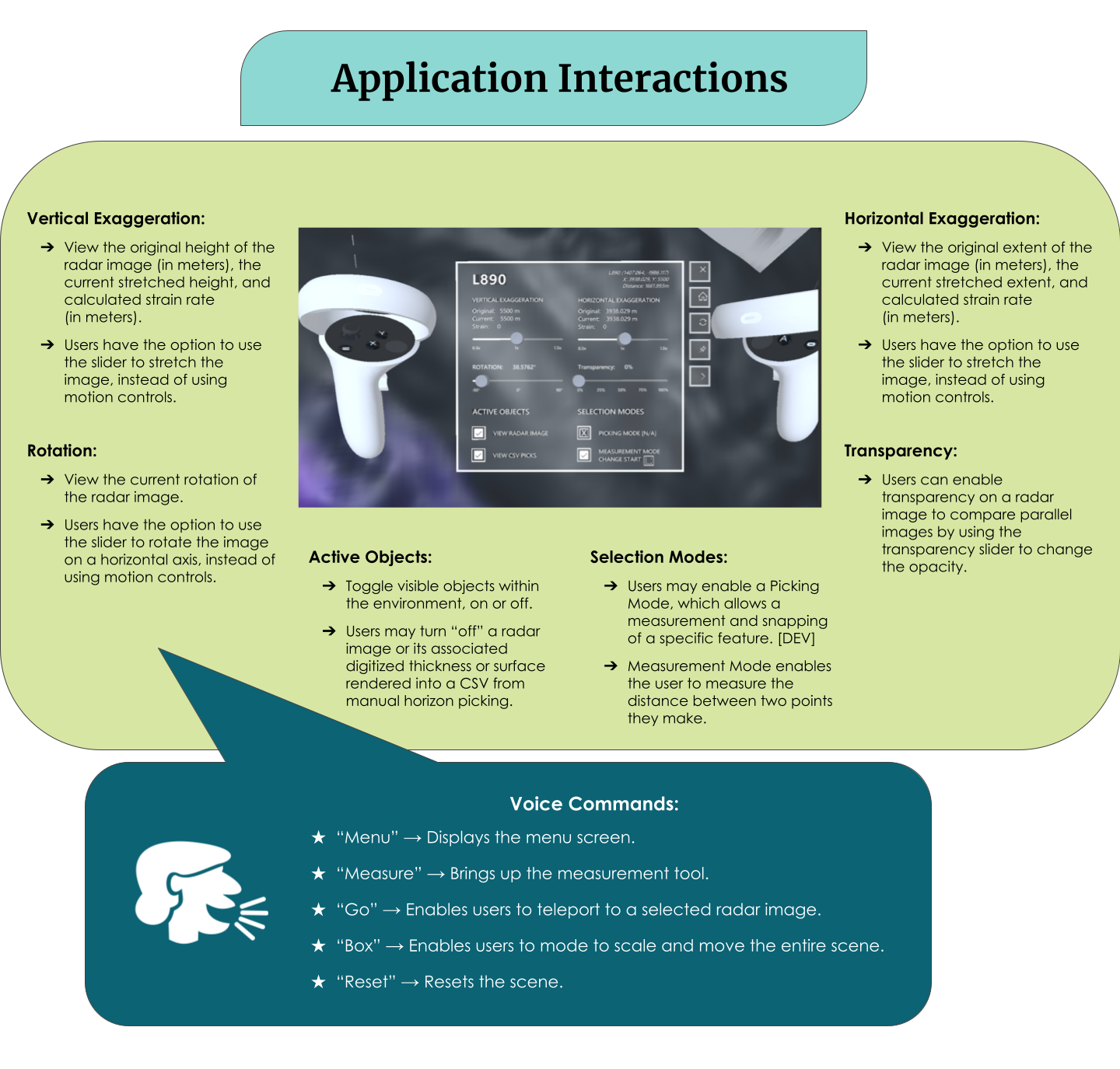

The in-app user interfaces were developed specifically with scientific analysis in mind. Each radargram has a fully interactive menu, which includes: vertical and horizontal stretching sliders, strain calculator, transparency slider, rotation slider, toggleable measurement tool, toggleable snapping tool, object toggling, reset option, home menu option, and close option. This radargram menu opens when the user interacts with a radar image, and refreshes to show the current settings of that individual image. Additionally, a Home menu was developed with a mini-map of the Ross Ice Shelf that gives an overview and shows the user’s current location. From this Home menu, the user can adjust the size of the environment, teleport around the map, and reset the application.
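One consequence of combining stretching sliders with a measurement tool is that a distance picked on a stretched radargram has to be converted back to unstretched units before it means anything. The Python sketch below illustrates that idea only; it is not the application's code, and the function name `true_distance`, the point format, and the stretch parameters are all hypothetical.

```python
import math

def true_distance(p1, p2, h_stretch=1.0, v_stretch=1.0):
    """Distance between two picked points, in unstretched radargram units.

    p1, p2    -- (x, y) positions picked on the displayed (stretched) image
    h_stretch -- hypothetical horizontal stretch factor set by a slider
    v_stretch -- hypothetical vertical stretch factor set by a slider
    """
    if h_stretch <= 0 or v_stretch <= 0:
        raise ValueError("stretch factors must be positive")
    # Undo the display scaling on each axis before measuring.
    dx = (p2[0] - p1[0]) / h_stretch
    dy = (p2[1] - p1[1]) / v_stretch
    return math.hypot(dx, dy)

# The same two picks read differently depending on the slider settings.
print(true_distance((0, 0), (6, 8)))            # 10.0 (no stretch)
print(true_distance((0, 0), (6, 8), 2.0, 2.0))  # 5.0 (image shown at 2x)
```

Dividing by the stretch factor on each axis separately matters because the vertical and horizontal sliders are independent, so a single overall scale would give wrong results whenever the two differ.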

AntARctica was specifically designed for Mixed Reality platforms for several reasons. The Microsoft HoloLens2 (an AR headset) allows the user to remain in their familiar environment, which can ease known side effects of VR exposure. AR also allows a scientist to view data in the application while simultaneously carrying out analysis on a separate device, without needing to leave the application environment. The HoloLens2 has no controllers or handheld devices, which encourages development around hand gesture tracking and eye tracking. This allows the user to interact with the application in a more natural way, using their own hands.

The Meta Quest2 (a VR headset) allows users to completely immerse themselves in the application environment. The users’ physical environment (e.g. clutter, poor lighting, outdoor settings) will not impact the visual experience within the headset. Additionally, the internal hardware and capabilities of the VR headset can allow the user to view the application (and the data within it) in higher resolution. While both headsets can still be used tethered to a machine running the application environment, the Meta Quest2’s internal hardware is better suited to larger scale applications.

Limitations

AntARctica encountered similar limitations to Inside the Ice Shelf: Zoom Antarctica with regard to the sheer size of the ROSETTA-Ice Project’s radar data. The data files themselves are large, and the images rendered for the application range from 300 to 600 dpi. The solution was to restrict the dataset to the subset of radar lines that cross a feature of interest: a near-basal horizon inside the ice.

This feature, the Beardmore Basal Body (BBB), had been digitized along the flow of a major outlet glacier along the Transantarctic Mountains. Focusing on it allowed us to significantly reduce the number of rendered radar images, which in turn reduced lag in the HoloLens2 and Quest2 environments, particularly when running untethered from an external machine. An additional limitation for the HoloLens2 was accurately developing the gesture and voice controls. While both headsets struggled with voice controls, the Quest2’s handheld controllers were easier to use when manipulating the radar images.
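As a rough illustration of why image resolution and image count drive the load on the headsets, the Python sketch below estimates the uncompressed size of one rendered radargram at 300 and 600 dpi. The 20 in x 5 in image dimensions and the 4 bytes per pixel (RGBA) figure are assumptions chosen for the example, not values from the project, and real texture compression would lower the totals.

```python
def texture_bytes(width_in, height_in, dpi, bytes_per_pixel=4):
    """Uncompressed size in bytes of an image rendered at the given dpi.

    bytes_per_pixel=4 assumes an RGBA image with 8 bits per channel.
    """
    width_px = round(width_in * dpi)
    height_px = round(height_in * dpi)
    return width_px * height_px * bytes_per_pixel

# Hypothetical 20 in x 5 in radargram at both ends of the 300 to 600 dpi range.
low = texture_bytes(20, 5, 300)
high = texture_bytes(20, 5, 600)

print(f"{low / 1e6:.1f} MB")   # 36.0 MB
print(f"{high / 1e6:.1f} MB")  # 144.0 MB
print(high // low)             # 4
```

Doubling the dpi quadruples the pixel count, so under these assumptions every radargram removed from the scene saves tens to hundreds of megabytes.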

Conclusion

The ongoing development of the AntARctica application demonstrates how well these devices can be used to support educational outreach and scientific research. The application was demonstrated at Lamont-Doherty Earth Observatory’s Annual Open House in 2022 and at the American Geophysical Union’s 2022 Fall Meeting, to resounding success. Scientists and interested parties alike were able to engage with the dataset and make measurements and interpretations in real time while learning about the ice shelf. Prior to these demonstrations, a researcher interested in the Beardmore Basal Body discovered an additional instance of the feature by looking at a segment of radar within the application. This allowed them to make an additional digitization of the feature and improve the resolution of their research.

Future work for this application includes developing networking for multiple users to interact with the same environment.

Benjamin Yang and Shengyue Guo are 2022 AGU Michael H. Freilich Student Visualization Competition Winners for their work on AntARctica.

Read more about AntARctica:

- AntARctica: An Immersive 3D Look into Antarctica’s Ice Using Augmented Reality and Virtual Reality

by Alexandra Boghosian, S. Isabel Cordero, Kirsty J Tinto, Steven Feiner, Robin E. Bell, Benjamin Yang, Shengyue Guo. AGU 2022 Fall Meeting Poster IN32B-0394

- Forked Repository for antARctica: AR visualizer for ice-penetrating radar data on Antarctica's Ross Ice Shelf by Sofía Sánchez-Zárate, Shengyue Guo, Ben Yang, Qazi Ashikin, and Joel Salzman (2022) via GitHub

- Repository for antARctica: AR visualizer for ice-penetrating radar data on Antarctica's Ross Ice Shelf by Sofía Sánchez-Zárate and Shengyue Guo (2021) via GitHub